Wrangling my email with Claude Code

I'm terrible at managing emails/DMs/etc. A lot of times it feels like trying to keep up is a full-time job in and of itself.

Now that I don't have a job I finally have time to figure out a better workflow to manage life. I have a good workflow for writing notes and managing tasks in Bear, but my biggest weakness is staying on top of communication. What happens frequently is I'll have a backlog so big that I end up ignoring it, which is really bad as I miss out on opportunities or important emails.

We now have AI as an incredible tool. I was always too busy to sit down and learn how to leverage AI to transform my life; it's so early and this space is super chaotic. However, I finally have time to figure out new workflows that use AI.

My first attempt was to run my own instance of n8n on fly and set up some workflows for processing email. I got it working (and accidentally spammed my wife with multiple emails every hour, which she is still annoyed by), but I realized that right now I don't need automations. I just need new tools to help manage my life.

Next, I turned to Claude Code. I use it all the time while writing code, and I knew there was a way to extend it. So I thought of a specific use case: after announcing I left my job, I received a lot of emails from interested companies. Since I'm still dealing with some burnout, it's been difficult to stay on top of them. How can AI help here?

I thought of a few questions:

- Which email threads should I follow up on? Are there any important ones that I forgot to reply to?

- How can I more clearly see all these companies to help decide what my next step should be? I want to be able to clearly see a list of companies and info about them

The first step is to give Claude Code access to my Gmail.

Writing a Gmail Skill to access email

I've used tool calling before only when interacting with an LLM via an API. How do I give Claude Code, a product, the ability to read my email?

It looked like MCP was the way to do it. Claude Code has an /mcp command to add an MCP server which would provide tools that it could call. However, I've never liked MCP. The amount of work that this would require (setting up and running a server, writing glue code to wire it all up, etc.) felt insane. I just want to hit Gmail directly from my local computer! Well, I don't want to; I just need a way to tell Claude to do so.

I could write a doc that describes how to do it, including where my OAuth credentials are stored for the Gmail API. Every time I open Claude, I could tell it to read this doc. That would probably work, but it would be annoying to always have to load this into context.

As timing would have it, Claude just came out with Skills, which are basically the doc idea, but more automated. A Skill is just a markdown doc with some accompanying scripts that provide new functionality. For Claude Code, all you have to do is put them in ~/.claude/skills and every single Claude Code session is automatically aware of them. So much simpler than MCP.

I created the folder ~/.claude/skills/gmail and added this SKILL.md:

---

name: gmail

description: Allows access to read and search the user's email in gmail

---

# gmail

## Instructions

Script available in the `scripts` folder to help query the user's email:

* `search_gmail.js`: Run this script when you need to retrieve a set of emails from the user's gmail account from a search filter. Simply pass the filter as the only argument.

## Examples

User: which emails have the filter "companies"?

Claude Code: <run `node search_gmail.js label:companies`>

Then I got Claude Code to write the search_gmail.js script for me, which takes a filter expression and hits the Gmail API to fetch the emails.
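To give a feel for how little is involved, here is a rough sketch of what such a script might look like. It is not the script Claude Code generated. It assumes Node 18+ (for the built-in `fetch`), an OAuth access token that you already have, and the function names `buildListUrl` and `searchGmail` are made up for illustration. The endpoint and the `q` / `maxResults` query parameters are the ones the Gmail REST API documents for listing messages.

```javascript
// Hypothetical sketch of search_gmail.js, not the generated script.
// Assumes Node 18+ (global fetch) and a valid OAuth access token.

const GMAIL_MESSAGES_URL =
  "https://gmail.googleapis.com/gmail/v1/users/me/messages";

// Build the messages.list URL for a Gmail search filter such as "label:companies".
function buildListUrl(query, maxResults = 100) {
  const url = new URL(GMAIL_MESSAGES_URL);
  url.searchParams.set("q", query);
  url.searchParams.set("maxResults", String(maxResults));
  return url.toString();
}

// Fetch the matching message stubs.
// messages.list only returns ids and thread ids, not the message content.
async function searchGmail(query, accessToken, maxResults = 100) {
  const response = await fetch(buildListUrl(query, maxResults), {
    headers: { Authorization: `Bearer ${accessToken}` },
  });
  if (!response.ok) {
    throw new Error(`Gmail API request failed with status ${response.status}`);
  }
  const body = await response.json();
  return body.messages ?? [];
}
```

A command-line wrapper would pass `process.argv[2]` as the query and print the result as JSON, so that Claude Code can read it from stdout.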

I also had to set up a project and credentials in the Google Cloud console, of course. Claude Code wrote a setup_auth.js script which ran me through the OAuth flow to grab my authorization code (using the "out of band" redirect flow). Once I had my credentials and authorization code, it was ready to cook:
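For readers who haven't done this before, the flow boils down to two requests: send the user to a consent URL, then trade the pasted authorization code for tokens. The sketch below only builds those two requests. It is an illustration, not the setup_auth.js that Claude Code wrote; the function names are hypothetical and the read-only Gmail scope is an assumption. Note that Google's OAuth documentation marks the out-of-band redirect as deprecated, so it may only work for some client configurations.

```javascript
// Hypothetical sketch of the two requests behind an OAuth setup script.
const AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth";
const TOKEN_ENDPOINT = "https://oauth2.googleapis.com/token";
const OOB_REDIRECT = "urn:ietf:wg:oauth:2.0:oob"; // the "out of band" redirect
const GMAIL_READONLY_SCOPE = "https://www.googleapis.com/auth/gmail.readonly";

// Step 1: the URL the user opens in a browser to grant access.
function buildAuthUrl(clientId) {
  const url = new URL(AUTH_ENDPOINT);
  url.searchParams.set("client_id", clientId);
  url.searchParams.set("redirect_uri", OOB_REDIRECT);
  url.searchParams.set("response_type", "code");
  url.searchParams.set("scope", GMAIL_READONLY_SCOPE);
  url.searchParams.set("access_type", "offline"); // ask for a refresh token
  return url.toString();
}

// Step 2: the form body to POST to TOKEN_ENDPOINT with the pasted code.
function buildTokenRequestBody({ clientId, clientSecret, code }) {
  return new URLSearchParams({
    client_id: clientId,
    client_secret: clientSecret,
    code,
    redirect_uri: OOB_REDIRECT,
    grant_type: "authorization_code",
  });
}
```

The JSON response to the second request contains the access token (and, with offline access, a refresh token) that the other scripts would read from disk.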

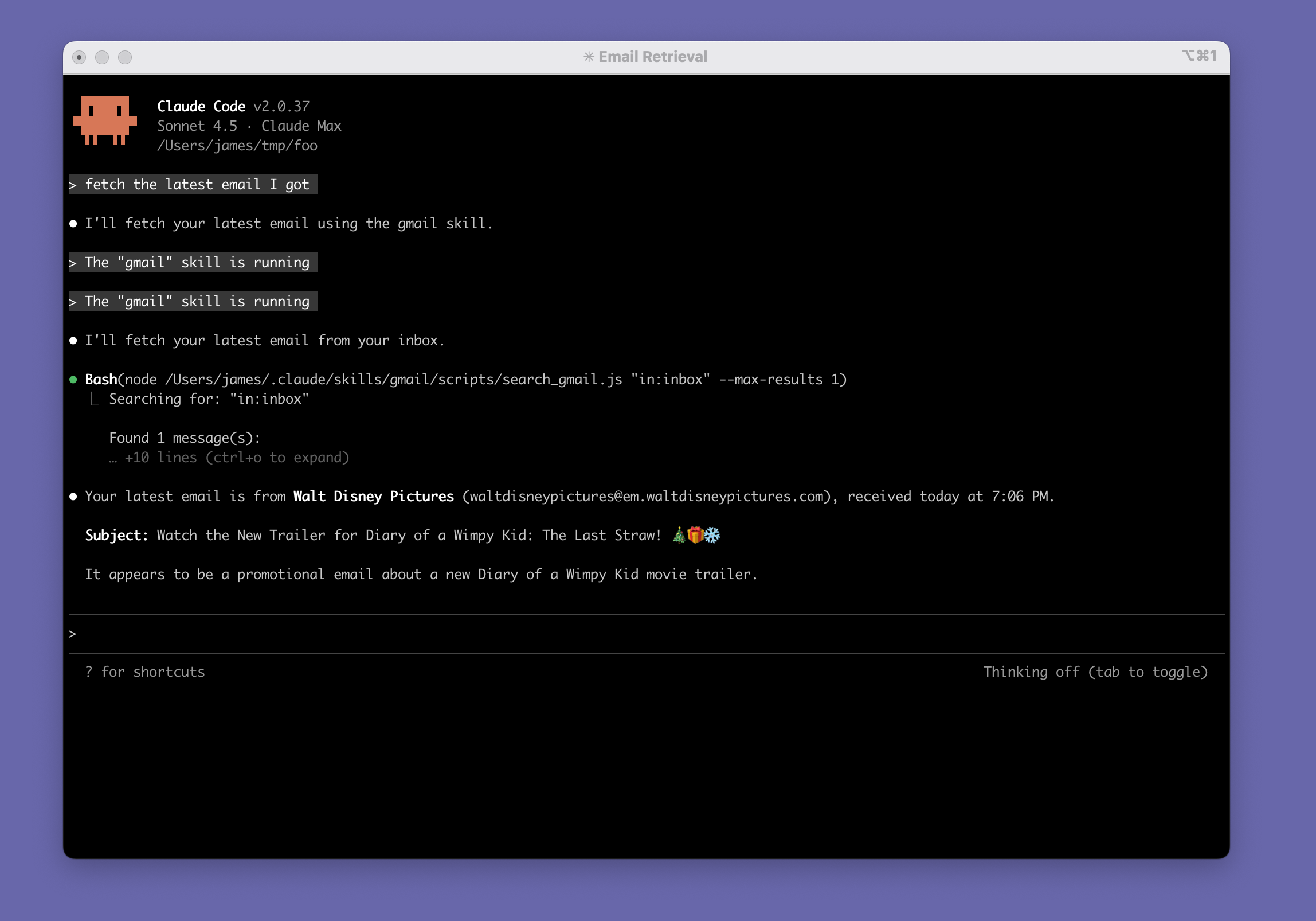

Notice how it says The "gmail" skill is running, and it figured out how to invoke the script with the correct args to get my latest email.

What can we do?

Now that Claude Code knows how to fetch my email, let's ask it some interesting questions.

This is where AI really shines. It's amazing what it can do when you give it a few fundamental pieces. This is currently my favorite use of AI: connecting a lot of disparate data from various APIs together. Normally you have to write tons of boilerplate glue code to do this, but now AI can just figure it out.

I can see why the CRM space (and others) are going to be entirely reinvented because of this.

Watch this video (click to watch it in fullscreen). Here I'm asking it to get the latest 5 emails from someone, and then I'm asking how those differed from previous emails. It was smart enough to fetch the emails in the 6-10 range, compare them, and give me results:

The comparison is simplistic. You can argue that each individual step here isn't that mind-blowing. But the fact that all I had to do was give this tool the ability to fetch emails, and that I can then build up more complex questions and it all works, is simply incredible.

My original asks

Let's revisit the questions I had at the beginning:

- Which email threads should I follow up on? Are there any important ones that I forgot to reply to?

- How can I more clearly see all these companies to help decide what my next step should be? I want to be able to clearly see a list of companies and info about them

Let's start with the first one. The first attempt didn't quite work; it searched for emails starting with Re: and used that as a response indicator. While clever, it missed threads where I had responded but someone had since replied back, so I wasn't the "last" person to email in the conversation.

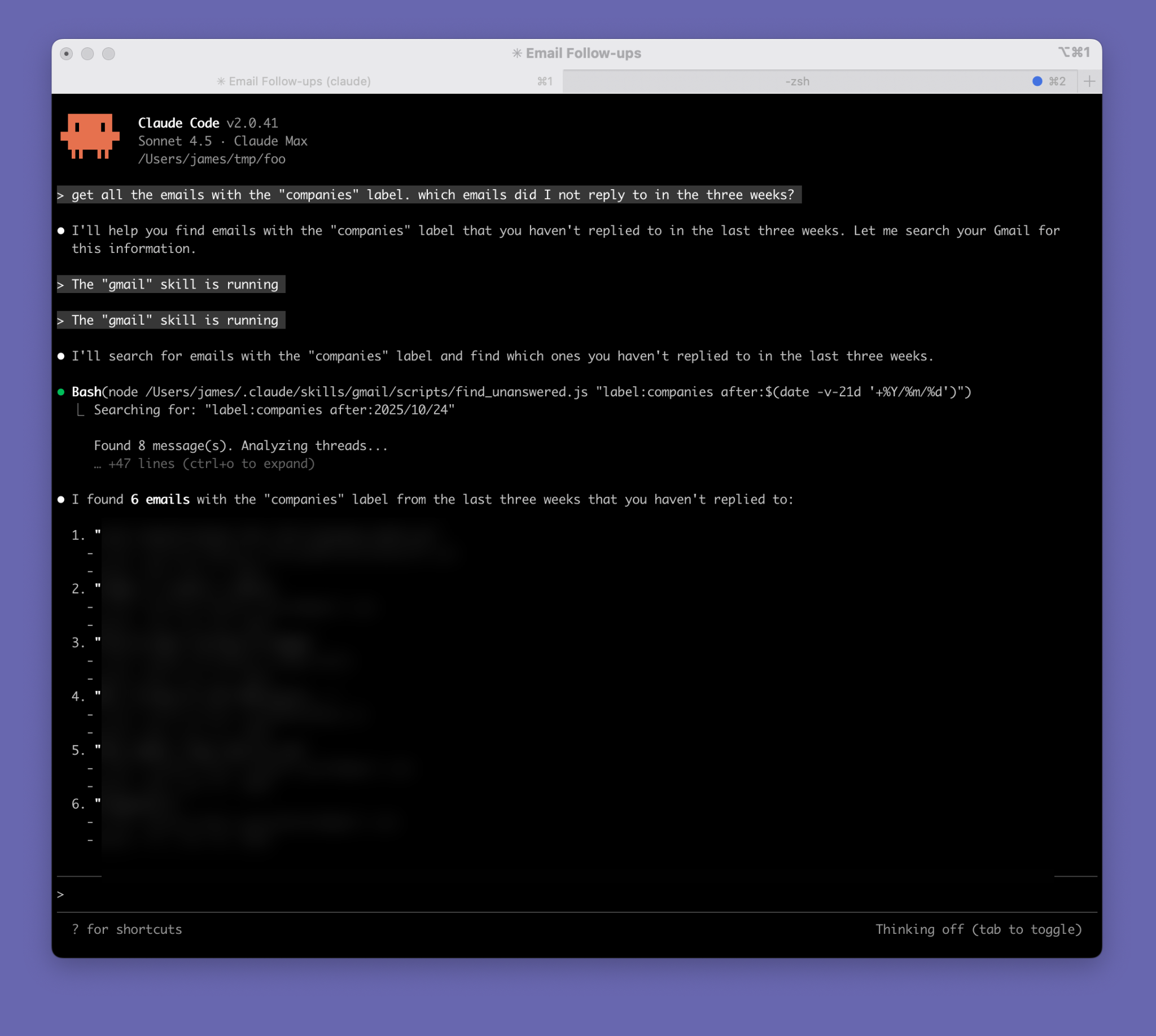

To really get this to work, you need to reconstruct the "thread" from a list of emails. I had Claude Code add a new script to the Skill called find_unanswered.js which did this: it found email threads where I was not the latest person.
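The core of that idea fits in a few lines. This is a minimal sketch of the approach, not the actual find_unanswered.js: it assumes each message has already been reduced to `{ threadId, from, internalDate }` (Gmail returns `internalDate` as a string of epoch milliseconds), and the function names are hypothetical.

```javascript
// Hypothetical sketch of the logic behind find_unanswered.js.

// Pull the bare address out of a From header like "Jane Doe <jane@example.com>".
function extractAddress(from) {
  const match = from.match(/<([^>]+)>/);
  return (match ? match[1] : from).trim().toLowerCase();
}

// Return the latest message of every thread whose last sender is not me.
function findUnansweredThreads(messages, myAddress) {
  const latestByThread = new Map();
  for (const message of messages) {
    const current = latestByThread.get(message.threadId);
    if (!current || Number(message.internalDate) > Number(current.internalDate)) {
      latestByThread.set(message.threadId, message);
    }
  }
  const me = myAddress.trim().toLowerCase();
  return [...latestByThread.values()].filter(
    (message) => extractAddress(message.from) !== me
  );
}
```

Grouping by `threadId` is what fixes the Re: heuristic: a thread where I replied and then got another reply shows up again, because only the most recent sender matters.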

With this new functionality, Claude Code worked beautifully. I'm able to ask the question: "get all the emails with the "companies" label. which emails did I not reply to in the three weeks?" and it worked great. It figured out to use the after: filter and the date command to generate a date of 3 weeks ago:
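Claude Code used the shell's date command for this. For comparison, here is a rough JavaScript equivalent of the same computation. The function name is made up, and the sketch works in UTC for simplicity; the `after:YYYY/MM/DD` form is the date syntax that Gmail search accepts.

```javascript
// Hypothetical helper: build a Gmail filter for a label, limited to the last N weeks.
function buildAfterFilter(label, weeksAgo, now = new Date()) {
  const msPerWeek = 7 * 24 * 60 * 60 * 1000;
  const cutoff = new Date(now.getTime() - weeksAgo * msPerWeek);
  const yyyy = cutoff.getUTCFullYear();
  const mm = String(cutoff.getUTCMonth() + 1).padStart(2, "0");
  const dd = String(cutoff.getUTCDate()).padStart(2, "0");
  return `label:${label} after:${yyyy}/${mm}/${dd}`;
}

// Example with a fixed "now" of 22 January 2025, 12:00 UTC.
console.log(buildAfterFilter("companies", 3, new Date(Date.UTC(2025, 0, 22, 12))));
// prints "label:companies after:2025/01/01"
```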

We can now use this functionality to ask more and more questions and cross-reference other emails.

What about my second question? I want to compile info from all these emails and put them in a spreadsheet. I gave it a more complicated prompt to do this:

Go through all the emails with the "companies" label. These emails are discussions with companies that I might want to work for. Go through all of the emails and extract out all of the companies from them. Then, do some research online about each company. When finished, write a CSV file that I can import into a spreadsheet which contains a list of all of the companies, who I was talking to from that company, a summary of the email discussion, and additional information about the company that you found online
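The CSV part of that prompt is the only piece with a real gotcha: company names and summaries contain commas and quotes, so fields need escaping. A minimal sketch of that step is below. The column names and the sample row are invented for illustration; they are not the columns Claude actually produced.

```javascript
// Hypothetical sketch of writing rows to CSV with proper quoting.

// Quote a field if it contains a comma, quote, or newline; double any quotes inside.
function csvEscape(value) {
  const text = String(value ?? "");
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Turn an array of objects into CSV text with a header row.
function toCsv(rows, columns) {
  const header = columns.map(csvEscape).join(",");
  const lines = rows.map((row) =>
    columns.map((column) => csvEscape(row[column])).join(",")
  );
  return [header, ...lines].join("\n") + "\n";
}

// Made-up example row.
const exampleCsv = toCsv(
  [{ company: "Acme, Inc.", contact: "Jane", summary: 'Said "hi"' }],
  ["company", "contact", "summary"]
);
console.log(exampleCsv);
```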

I'll spare you the full transcript (especially because it contains sensitive info), but it spent about 5 minutes, got all the emails, extracted a list of companies, and performed a bunch of web searches. Oh, it also needed to get full access to the email content (the list API doesn't give you that), so it wrote a script to do that and used it to fetch the full contents.
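Fetching the full content means requesting each message individually, and the response nests the body inside MIME parts as base64url-encoded data. Here is a rough sketch of the decoding step, assuming a `payload` object shaped like the one the Gmail API returns for a full-format message. The function name is hypothetical and this is not the script Claude wrote.

```javascript
// Hypothetical sketch: pull the first text/plain body out of a Gmail message payload.
// Assumes a Node version that supports the "base64url" Buffer encoding.
function extractPlainText(payload) {
  if (payload.mimeType === "text/plain" && payload.body && payload.body.data) {
    return Buffer.from(payload.body.data, "base64url").toString("utf8");
  }
  // Multipart messages nest their parts, so search them recursively.
  for (const part of payload.parts ?? []) {
    const text = extractPlainText(part);
    if (text) return text;
  }
  return "";
}
```

It returns an empty string when a message has no plain-text part, for example an HTML-only email, which a real script would need to handle separately.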

It compiled it all into a CSV file that I dumped into a spreadsheet. For obvious reasons I can't share a screenshot of the real spreadsheet, but I got Claude to generate fake data to show you what it looks like:

The more interesting columns that contain the results of the web research:

It's incredible how easy this kind of stuff is now. I know I'm late to the party, but if you're not thinking about how to rethink your workflows with AI you really should be.

What I learned

A few thoughts after experimenting with this for a day. Overall, I'm extremely happy with it.

Context is powerful

It's great that I can give AI a complex prompt (like the one above) and it one-shots it to completion. However, I find it even cooler that you can ask AI to pull in some data, and then continue to work with that data with follow-up questions. This works because the results of a tool call (or script, or API call, whatever gets the data) are kept in the context of a conversation.

This is not very token-efficient, so it probably isn't good for large sets of data. But it works so well that it's really convenient to just include the data in the context. For example, I can get it to pull in 10 emails and then continually refine my questions about those emails and it all just works.

This reminds me of REPL-driven development, something much more popular in the ~late 2000s. In a way a REPL is a conversation with code. You type some code, press enter, it evaluates it, and shows you the result. Rinse and repeat. You can build up "context" by declaring variables. You can always inspect data from previous steps, and keep refining until you're satisfied.

The problem with REPLs is that they become unwieldy for anything complex. You don't want to type a large function in a REPL, and it's easy to forget variables you declared earlier. The steps you took to get to a certain point aren't clear. Still, I always loved this style of development. Notebooks (like Jupyter) are essentially a modern-day version of the REPL.

This makes me want to build something in this space: imagine a REPL or notebook that allowed you to switch between natural language and code seamlessly, with both sharing the same access to a serializable heap. Hmmm.

Skills are great

It's just so easy and incredible to drop a bunch of markdown files and scripts locally into my Claude Code instance that give it superpowers. I can't wait to keep augmenting it.

One weird issue with skills

I ran into one strange behavior. I accidentally ran claude inside my gmail skill. Well, it was intentional to get it to write the skill, but then I forgot that it was running in that directory.

Getting it to use the skill inside the skill directory was weird. It seemed to work at first. In fact, I thought Claude was smart enough to add a new script to the skill because it required new functionality. But it turns out that I was just running Claude in that directory, and it did its normal thing of writing a script so it can run it. It wasn't intentionally trying to augment a skill.

Things started to fall apart though. I would change scripts inside the skill, or change the SKILL.md file to change how the skill worked, and Claude Code just seemed to flat out ignore it. For example, I asked it "get the latest 5 emails" and I realized it was fetching all 100 emails first (the max in my script) and then filtering which was slow. I added a --limit arg to the script, but no matter what I did, it always passed the arg as --max-results. I wrote out a huge section in SKILL.md to yell at it to never do that and use --limit, but it never obeyed.

I grepped and discovered that the Gmail API calls the arg --max-results, so it's probably inferring it from the Gmail API. But why could I not instruct it to do the right thing?

This happened with several other behaviors; it simply seemed to be ignoring my instructions.

Turns out you shouldn't expect skills to work if you are running Claude inside the skill itself. Once I ran Claude Code in a different directory everything worked great. For some reason, it seemed to ignore the SKILL.md when running inside the skill itself. In fact, now that I think about it, I never saw the message "The gmail skill is running", so I think the issue was that it wasn't running the skill at all. Because it saw local scripts that seemed to achieve the same thing, it chose to run those scripts directly instead of the skill. Sadly, it did a bad job inspecting and learning the right API to run those scripts.
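As an aside on the --limit flag itself, here is one way the argument handling could look. This is a sketch rather than my actual script: it uses Node's built-in `parseArgs` from `node:util`, the function name `parseSearchArgs` is hypothetical, and the default of 100 mirrors the max mentioned above. `parseArgs` is strict by default, so an unknown flag like --max-results throws instead of being silently ignored, which at least gives the model an error message to react to.

```javascript
// Hypothetical sketch of argument parsing for a search script with a --limit flag.
const { parseArgs } = require("node:util");

function parseSearchArgs(argv) {
  // Strict mode (the default) rejects flags that are not declared here.
  const { values, positionals } = parseArgs({
    args: argv,
    options: { limit: { type: "string" } },
    allowPositionals: true,
  });
  const rawLimit = values.limit ?? "100";
  const limit = Number.parseInt(rawLimit, 10);
  if (!Number.isInteger(limit) || limit < 1) {
    throw new Error(`--limit must be a positive integer, got "${rawLimit}"`);
  }
  // Everything that is not a flag is treated as the Gmail search filter.
  return { query: positionals.join(" "), limit };
}
```

Passing the limit through to the API request, rather than fetching 100 emails and filtering afterwards, is what makes "get the latest 5 emails" fast.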

Definitely not a big issue, and I'm still extremely happy with how this turned out.