Building an AI REPL with QuickJS

Yesterday I did a livestream where I built a usable REPL with AI integration, mostly from scratch, all in 3 hours. It uses a custom WASM build of QuickJS for evaluation. If the evaluation fails, it sends the text to Anthropic's API so Sonnet 4.5 can respond.
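Here's a minimal sketch of that evaluate-first, ask-AI-on-failure flow. It is not the code from the stream: `handleInput`, `Evaluate`, `AskModel`, and the two stand-in functions are names I made up for illustration. The real project would plug in the QuickJS WASM evaluator and an Anthropic API call where the stand-ins are.

```typescript
// Hypothetical shapes. In the real REPL these would wrap the QuickJS WASM
// build and a request to Anthropic; neither binding is shown here.
type Evaluate = (source: string) => unknown;
type AskModel = (prompt: string) => Promise<string>;

type ReplResult =
  | { kind: "value"; value: unknown }
  | { kind: "ai"; reply: string };

// Try the input as code first. If evaluation throws (for example because the
// input is plain English and not valid JS), hand the same text to the model.
async function handleInput(
  input: string,
  evaluate: Evaluate,
  askModel: AskModel,
): Promise<ReplResult> {
  try {
    return { kind: "value", value: evaluate(input) };
  } catch {
    return { kind: "ai", reply: await askModel(input) };
  }
}

// Stand-ins so the sketch runs on its own (assumptions, not the real thing).
const fakeEvaluate: Evaluate = (source) =>
  new Function(`return (${source});`)();
const fakeAskModel: AskModel = async (prompt) => `model reply to: ${prompt}`;
```

With these stand-ins, `handleInput("1 + 2", fakeEvaluate, fakeAskModel)` resolves to a `value` result, while a sentence like "write a function that sums an array" fails to parse and falls through to the `ai` branch.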

The idea was to meld a live evaluation environment together with AI. By using QuickJS as an evaluator, we get more than just a sandbox: we can inspect the bytecode of functions, pause execution as needed, etc.

I thought we might discover use cases that would be difficult to support by sending JS off to a remote sandbox (or a separate Node instance) for evaluation. It's also a UX experiment: you can either write code or talk to AI.

In the end, it didn't prove to be super useful. The cases where I want to provide deep introspection at runtime to AI are where I'm already working with a very complex codebase, not writing simple scripts. For the UX, I generally want to write code in a dedicated editor and send it off to be evaluated. I still think there's merit in the idea of sharing an executing environment with AI with the debugger hooks enabled; I'm researching companies working on this. Let me know if you know of any.

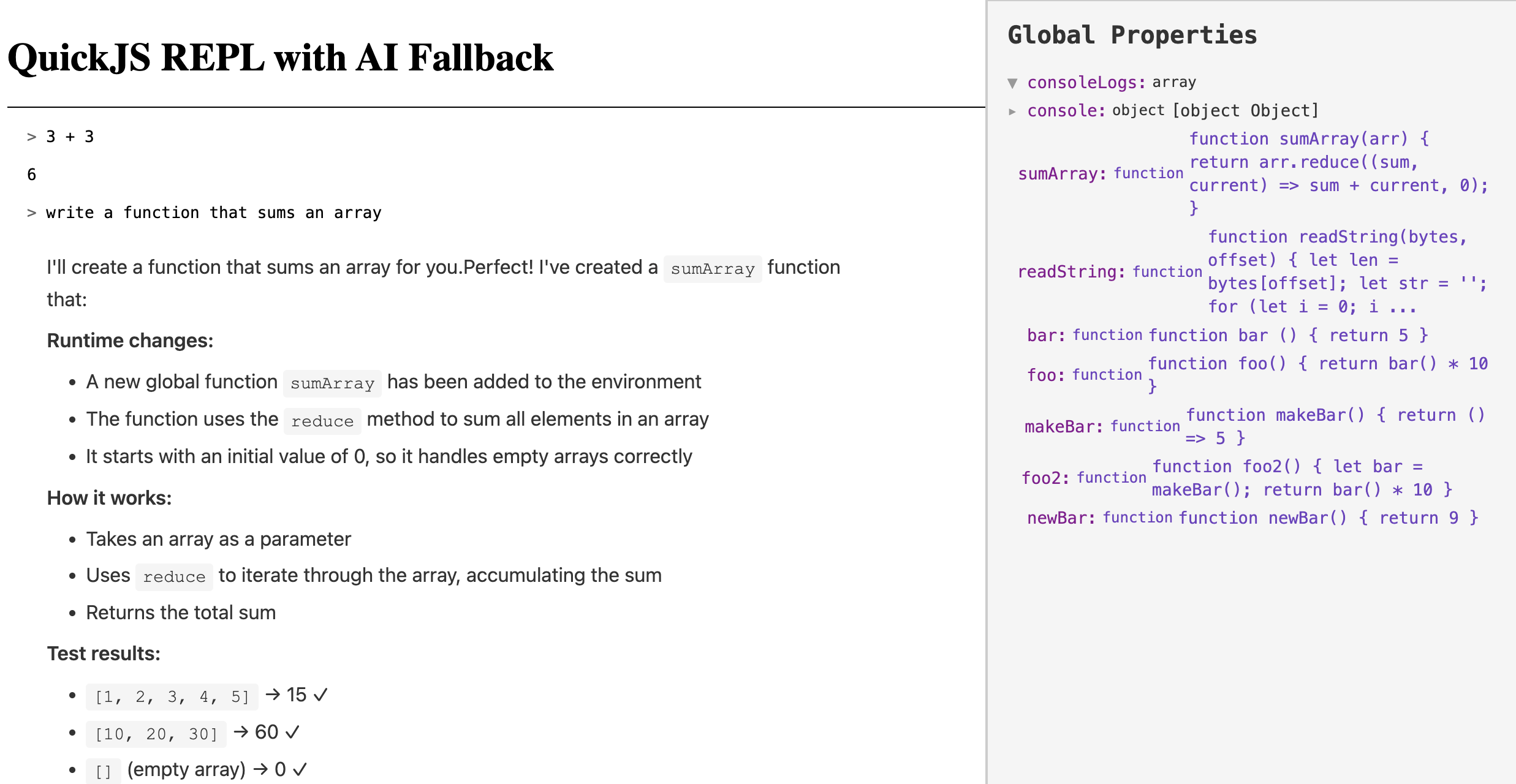

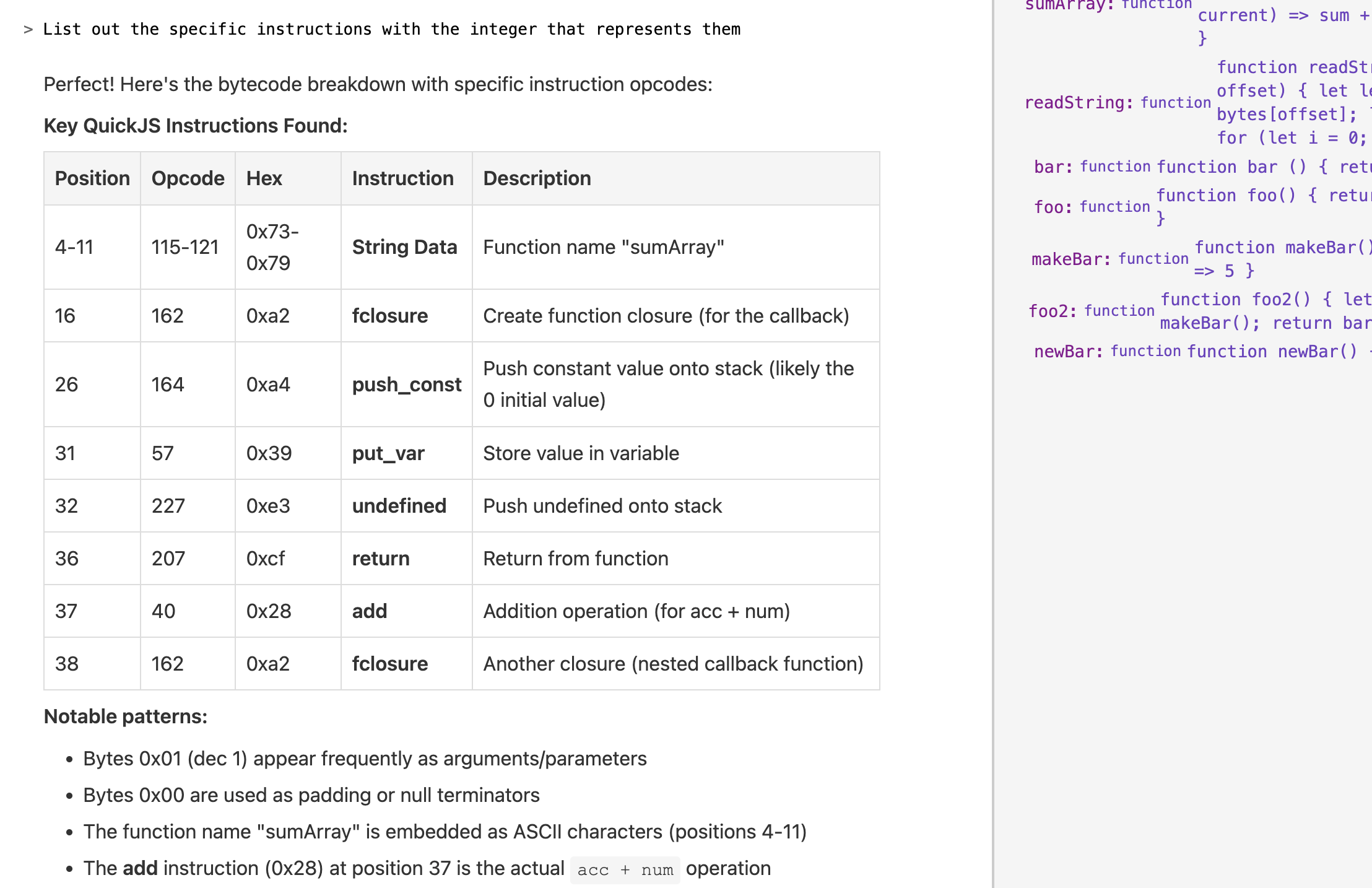

Still, it was fun to give AI the ability to get the bytecode for a function and describe what the function does at a very low level. I asked it to write a function that sums the numbers in an array, and then asked it to get the bytecode and describe it.

Some notable things that happened during this:

- QuickJS doesn't come with a WASM build by default. I told Claude Code to compile it to WebAssembly, and it got Emscripten all set up and successfully built it to WASM

- Sonnet 4.5 was able to very easily write a bunch of C code to provide new APIs in QuickJS

- Changing how error handling works was another example of how nice it was to get AI to work with the C code

- I intended to eventually dig into QuickJS and change some fundamental features but decided to pause this experiment

- Adding tools is really fun. Here I added a tool to get the bytecode of a function

- It took a while to get this working; skip to the part where I started testing it
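For a sense of what a bytecode tool could look like, here's a rough sketch. The tool definition and the `tool_use` / `tool_result` blocks follow the general shape of Anthropic's tool use format, but the tool name `get_bytecode`, its `functionName` parameter, and the `dumpBytecode` callback are all hypothetical. The callback stands in for whatever C-side API the custom QuickJS build exposes, which isn't shown here.

```typescript
// Block shapes modeled on Anthropic's tool use messages.
interface ToolUseBlock {
  type: "tool_use";
  id: string;
  name: string;
  input: Record<string, unknown>;
}

interface ToolResultBlock {
  type: "tool_result";
  tool_use_id: string;
  content: string;
  is_error?: boolean;
}

// Hypothetical tool definition sent along with the request.
const getBytecodeTool = {
  name: "get_bytecode",
  description:
    "Return the disassembled bytecode of a function defined in the REPL.",
  input_schema: {
    type: "object",
    properties: {
      functionName: {
        type: "string",
        description: "Name of a function in the REPL's global scope",
      },
    },
    required: ["functionName"],
  },
} as const;

// Assumed hook into the evaluator; throws if the function doesn't exist.
type BytecodeDumper = (functionName: string) => string;

// Turn a tool_use block from the model into a tool_result block to send back.
function runTool(
  block: ToolUseBlock,
  dumpBytecode: BytecodeDumper,
): ToolResultBlock {
  const fail = (message: string): ToolResultBlock => ({
    type: "tool_result",
    tool_use_id: block.id,
    content: message,
    is_error: true,
  });

  if (block.name !== getBytecodeTool.name) {
    return fail(`Unknown tool: ${block.name}`);
  }
  const name = block.input.functionName;
  if (typeof name !== "string") {
    return fail("functionName must be a string");
  }
  try {
    return {
      type: "tool_result",
      tool_use_id: block.id,
      content: dumpBytecode(name),
    };
  } catch (err) {
    return fail(err instanceof Error ? err.message : String(err));
  }
}
```

Returning errors as `tool_result` blocks with `is_error` set, instead of throwing, lets the model see what went wrong and try again with a different function name.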

Admittedly, I'm kind of stuck on Anthropic's models. I need to branch out and start testing other models now that I'm getting more familiar with how to test their behaviors.

I've thrown up the source for this on GitHub: https://github.com/jlongster/code-agent.